🧭 Summary: Causal AI in ACM CCS-Style Categories

🎯 Definition

Causal AI refers to computational systems that model, reason about, and learn cause-effect relationships. This lets them simulate interventions ("What if…?"), explain outcomes, and support decision-making in uncertain, complex environments.

📚 ACM CCS-Style Categories Where Causal AI Lives

1. Computing Methodologies → Artificial Intelligence → Knowledge Representation and Reasoning (KR&R)

- Focus: Representing cause-effect relationships using logic, graphs, and symbolic formalisms.

- Examples:

  - Structural Causal Models (SCMs)

  - Counterfactual and abductive reasoning

  - Causal rules, DAG-based inference
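To make the SCM and counterfactual items concrete, here is a minimal sketch of the usual three-step counterfactual recipe (abduction, action, prediction) on a toy linear model. The class `LinearSCM`, its coefficient `beta`, and the structural equations are illustrative assumptions, not something defined in this summary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearSCM:
    """Toy SCM (hypothetical): X := U_x,  Y := beta * X + U_y."""

    beta: float = 2.0

    def y_given(self, x: float, u_y: float) -> float:
        # Structural equation for Y.
        return self.beta * x + u_y

    def abduct_u_y(self, x_obs: float, y_obs: float) -> float:
        # Abduction: recover the exogenous noise consistent with the observation.
        return y_obs - self.beta * x_obs

    def counterfactual_y(self, x_obs: float, y_obs: float, x_new: float) -> float:
        u_y = self.abduct_u_y(x_obs, y_obs)  # step 1: abduction
        # Steps 2 and 3: action do(X = x_new), then prediction with the same noise.
        return self.y_given(x_new, u_y)


scm = LinearSCM(beta=2.0)
# Observed X=1, Y=3. What would Y have been had X been 0?
print(scm.counterfactual_y(x_obs=1.0, y_obs=3.0, x_new=0.0))  # 1.0
```

The observation fixes the noise term at `U_y = 1`, so the counterfactual answer differs from simply evaluating the equation with fresh noise.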

2. Computing Methodologies → Machine Learning

- Focus: Learning causal structures or estimating treatment effects from data.

- Examples:

  - Causal discovery algorithms

  - Uplift modeling

  - Counterfactual prediction
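As a rough illustration of treatment-effect estimation from data, the sketch below compares a naive difference in means with a stratified (confounder-adjusted) estimate. The eight-row dataset and the function names are made up for this example; the confounder `z` is assumed to be the only one and to be fully observed.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (z = confounder, t = treated?, y = outcome).
# Within each stratum of z, treatment raises y by exactly 1.
data = [
    (0, 1, 1.0), (0, 0, 0.0), (0, 0, 0.0), (0, 0, 0.0),
    (1, 1, 5.0), (1, 1, 5.0), (1, 1, 5.0), (1, 0, 4.0),
]


def naive_effect(rows):
    """Difference in mean outcome, ignoring the confounder."""
    treated = [y for _, t, y in rows if t == 1]
    control = [y for _, t, y in rows if t == 0]
    return mean(treated) - mean(control)


def adjusted_effect(rows):
    """Average of per-stratum effects, weighted by stratum size."""
    strata = defaultdict(list)
    for z, t, y in rows:
        strata[z].append((t, y))
    total = len(rows)
    ate = 0.0
    for units in strata.values():
        treated = [y for t, y in units if t == 1]
        control = [y for t, y in units if t == 0]
        ate += (len(units) / total) * (mean(treated) - mean(control))
    return ate


print(naive_effect(data))     # 3.0 (inflated by confounding)
print(adjusted_effect(data))  # 1.0 (the effect built into the toy data)
```

Because treated units are concentrated in the high-baseline stratum, the naive estimate overstates the effect; stratifying on `z` recovers it.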

3. Computing Methodologies → Probabilistic Modeling

- Focus: Encoding causal dependencies with uncertainty.

- Examples:

  - Causal Bayesian Networks

  - Structural Equation Models (SEMs)

  - Probabilistic programming for causal reasoning
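The difference between observing and intervening in a Bayesian network can be sketched with a three-node graph (Z → X, Z → Y, X → Y). All probability tables below are invented for illustration; the interventional query uses the standard truncated factorization, which drops the `P(X | Z)` factor.

```python
# Hypothetical conditional probability tables for binary Z, X, Y.
p_z = {0: 0.5, 1: 0.5}                        # P(Z = z)
p_x1_given_z = {0: 0.2, 1: 0.8}               # P(X = 1 | Z = z)
p_y1_given_xz = {(0, 0): 0.1, (1, 0): 0.3,    # P(Y = 1 | X = x, Z = z)
                 (0, 1): 0.6, (1, 1): 0.8}


def p_x_given_z(x: int, z: int) -> float:
    return p_x1_given_z[z] if x == 1 else 1.0 - p_x1_given_z[z]


def p_y1_given_x(x: int) -> float:
    """Observational query P(Y=1 | X=x): condition on X, so Z is reweighted."""
    num = sum(p_z[z] * p_x_given_z(x, z) * p_y1_given_xz[(x, z)] for z in p_z)
    den = sum(p_z[z] * p_x_given_z(x, z) for z in p_z)
    return num / den


def p_y1_do_x(x: int) -> float:
    """Interventional query P(Y=1 | do(X=x)): Z keeps its marginal distribution."""
    return sum(p_z[z] * p_y1_given_xz[(x, z)] for z in p_z)


print(round(p_y1_given_x(1), 2))  # approx. 0.70
print(round(p_y1_do_x(1), 2))     # approx. 0.55
```

The gap between the two numbers is the part of the observed association that comes from Z rather than from X itself.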

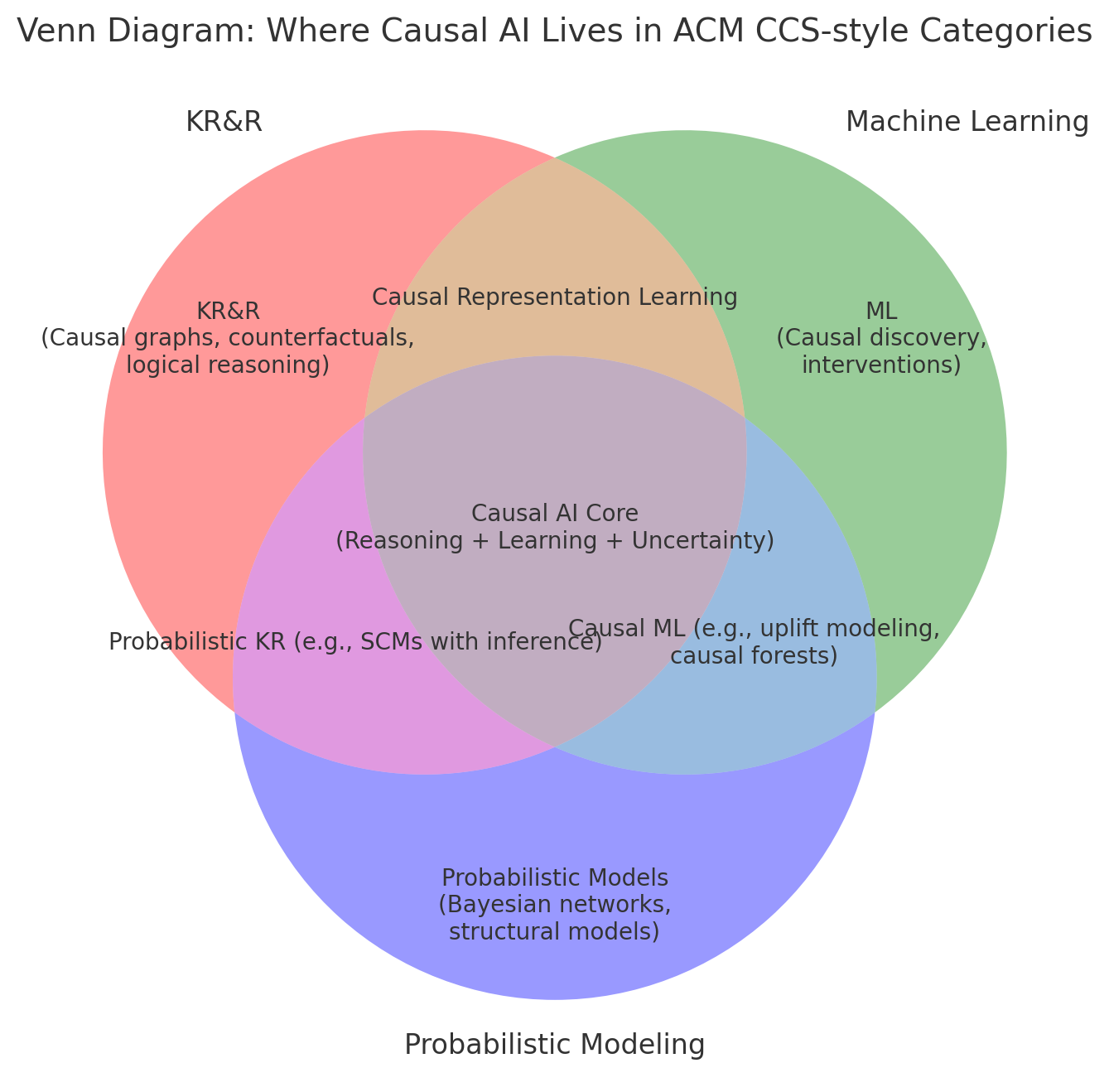

🌀 Venn Diagram of Causal AI’s Interdisciplinary Nature

🔁 Core Intersections

| Overlap Zone | Meaning |
|---|---|
| KR&R ∩ ML | Causal Representation Learning: Learning latent causal graphs that support symbolic reasoning. |
| KR&R ∩ Probabilistic | Probabilistic KR: Encoding causal knowledge with uncertainty, e.g., SCMs with probabilistic inference. |
| ML ∩ Probabilistic | Causal Machine Learning: Estimating treatment effects, counterfactual prediction, causal forests. |
| KR&R ∩ ML ∩ Probabilistic | 🔁 Causal AI Core: Full integration, i.e., systems that can represent, learn, and reason under uncertainty. |

🧩 Takeaway

Causal AI is not confined to a single ACM category. It is a cross-cutting area spanning:

- the representation power of KR&R,

- the learning ability of ML,

- and the uncertainty modeling of probabilistic systems.

This makes it central to trustworthy, explainable AI for decision support, especially in high-stakes fields like healthcare, economics, and law.